Given that we want to execute certain service tasks in Camunda directly, is there a noticeable difference in performance when using different implementations?

What is Camunda, and what is a Service Task?

For those unfamiliar with the platform, Camunda is an open-source engine that lets you design processes using visual diagrams. These diagrams are represented using a specification called BPMN, or Business Process Model and Notation.

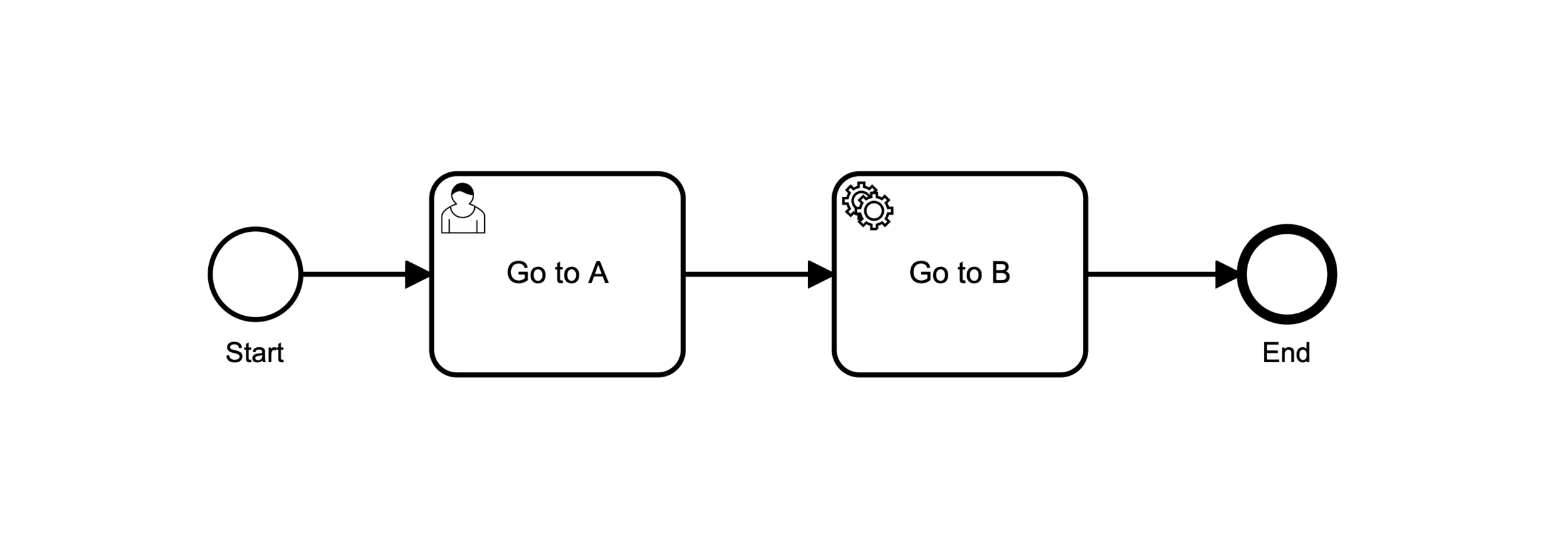

BPMN diagrams provide an alternative to designing and implementing processes in code. An example of a BPMN diagram is as follows.

There are several different task types in Camunda, each of which serves a different purpose and hence has different functionality. For example, user tasks represent an interaction point with a user, and come with several essential features that support that role, such as assignment.

The rectangular box in the diagram with the little “person” icon on the top-left represents a user task.

Notice the little gear icon in one of the boxes?

That represents a service task. Service tasks are usually where custom code is implemented. For instance, you can write code that makes HTTP REST calls to an external service and saves the results to the Camunda engine.

Camunda itself doesn’t deal with such functionality, which makes sense because Camunda is primarily a workflow engine. Instead of trying to handle the myriad possible ways of making network calls, it lets the user define these in code.

The experiment

There are different types of service task implementations available to the Camunda BPMN designer / engineer. We want to examine the differences between two of these: the Java Class implementation, and the External Task Client implementation.

The Java Class implementation is straightforward: because Camunda is written in Java, we can define Java classes in the Camunda project structure and expose them to Camunda for use. These classes are compiled together with the rest of the Camunda project before deployment, and are invoked directly by the Camunda engine.

The External Task Client is less direct: it relies on the REST API exposed by Camunda and on long polling to pick up tasks by “topic name”, essentially letting workers outside the Camunda platform handle task processing. For the purposes of this experiment, we use the Camunda External Task Client JS library, which runs on NodeJS.
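To make the long-polling idea concrete, here is a minimal in-memory sketch in Java. It is not Camunda's REST API or the JS client: TopicQueues, publish and longPoll are hypothetical names, and a BlockingQueue stands in for the engine's pending external tasks. The worker's poll blocks until a task on its topic appears or the timeout expires, which is the essence of long polling.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical in-memory stand-in for an engine that hands out external tasks by topic.
public class TopicQueues {
    private final Map<String, BlockingQueue<String>> topics = new ConcurrentHashMap<>();

    private BlockingQueue<String> queue(String topic) {
        return topics.computeIfAbsent(topic, t -> new LinkedBlockingQueue<>());
    }

    // Engine side: a process instance reaches an external task on this topic.
    public void publish(String topic, String taskId) {
        queue(topic).add(taskId);
    }

    // Worker side: block until a task arrives or the timeout passes (a long poll).
    // Returns null on timeout, after which a real worker would simply poll again.
    public String longPoll(String topic, long timeoutMillis) {
        try {
            return queue(topic).poll(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return null;
        }
    }

    public static void main(String[] args) {
        TopicQueues engine = new TopicQueues();
        engine.publish("summation", "task-1");
        System.out.println(engine.longPoll("summation", 1000)); // task-1
        System.out.println(engine.longPoll("factorial", 100));  // null, after about 100 ms
    }
}
```

One simplification to keep in mind: in Camunda, fetching an external task locks it for a lock duration instead of removing it, so another worker can pick it up if the first one never completes it.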

We evaluate these against two kinds of tasks: a basic one, and a more advanced one that is far more computationally intensive.

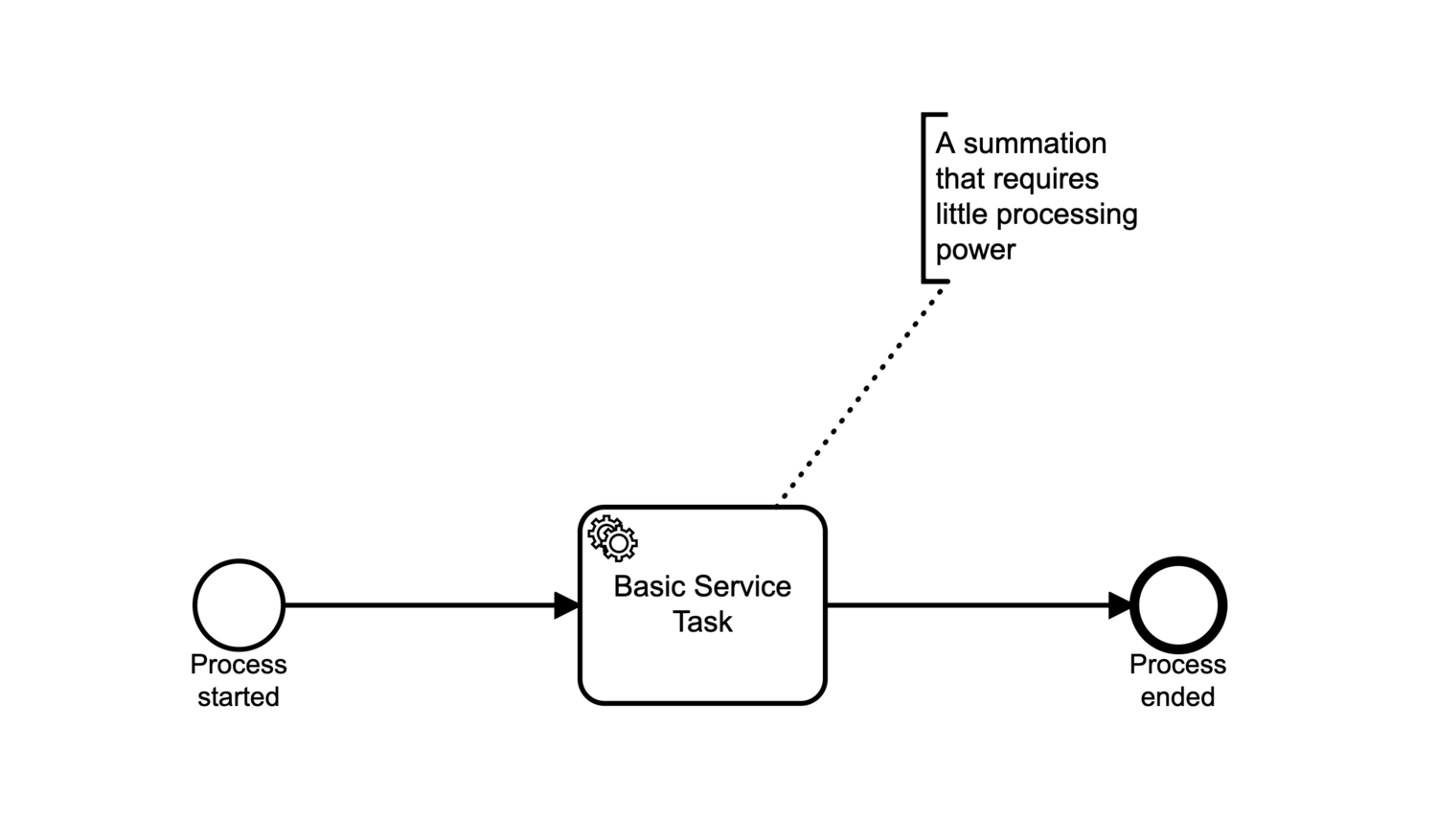

Basic Task: Summation

This is as simple as evaluating 1 + 1 and assigning the result to a variable. In Java, this looks like:

int summed = 1 + 1;

The associated BPMN diagram looks like the below.

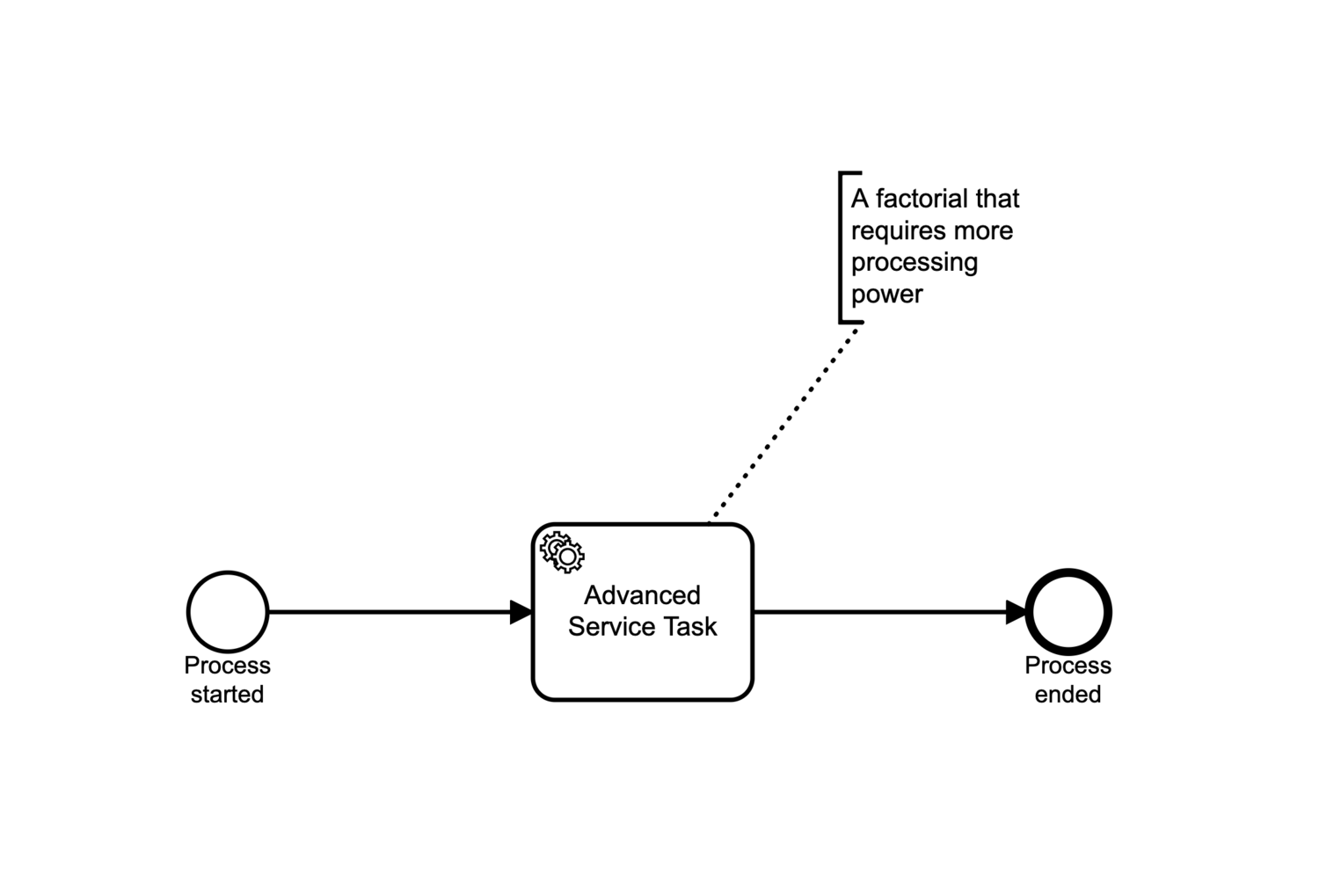

Advanced Task: Looped Factorial

In this task we increase the processing load, and hence the processing time, of a task. This mimics a long-running process that hogs a lot of computing resources.

To this end, we compute the factorial of 20 and repeat it 10,000,000 times. In Java, this looks like:

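private long factorial(int n) {
    // 20! = 2432902008176640000, which fits in a long but overflows an int
    if (n == 0) {
        return 1;
    }
    return n * factorial(n - 1);
}

// Inside the service task's method body:
for (int i = 0; i < 10000000; i++) {
    factorial(20);
}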
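For a rough standalone timing of the advanced task outside Camunda, a self-contained harness might look like the sketch below. The class name AdvancedTaskBenchmark and the checksum are illustrative additions, not the experiment's code: summing the results gives the loop an observable output, so the JIT compiler is less likely to discard the factorial calls as dead code.

```java
public class AdvancedTaskBenchmark {
    // Recursive factorial; 20! fits in a long but would overflow an int.
    static long factorial(int n) {
        if (n == 0) {
            return 1;
        }
        return n * factorial(n - 1);
    }

    // Runs the advanced task `iterations` times and returns a (wrapping) checksum.
    static long runLoop(int iterations) {
        long checksum = 0;
        for (int i = 0; i < iterations; i++) {
            checksum += factorial(20);
        }
        return checksum;
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long checksum = runLoop(10_000_000);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println("checksum=" + checksum + ", elapsed=" + elapsedMs + " ms");
    }
}
```

A single run like this includes JIT warm-up, so the elapsed time is only indicative; a harness such as JMH is the usual choice for careful measurements.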

The associated BPMN diagram looks like the below.

Other parameters

Both tasks are implemented first as a Java Class, and then as a NodeJS External Task Client. We also run two variants: one with a single worker, and one with two workers.

We also test concurrencies of 1, 10 and 100 hits per second, for 60 seconds each.
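One way to generate a fixed number of process starts per second is sketched below, assuming a Runnable that starts one process instance (for example, a call to the engine's REST API). LoadGenerator and run are hypothetical names; this is not the experiment's actual load tool.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class LoadGenerator {
    // Fires startProcess `ratePerSecond` times per second for `seconds` seconds,
    // then waits for every start to finish. Returns the number of starts fired.
    public static int run(int ratePerSecond, int seconds, Runnable startProcess) {
        int total = ratePerSecond * seconds;
        long periodMicros = 1_000_000L / ratePerSecond;
        CountDownLatch finished = new CountDownLatch(total);
        AtomicInteger fired = new AtomicInteger();
        ScheduledExecutorService ticker = Executors.newSingleThreadScheduledExecutor();
        // Starts run on a separate pool so a slow start does not delay the next tick.
        ExecutorService starters = Executors.newCachedThreadPool();

        ticker.scheduleAtFixedRate(() -> {
            if (fired.getAndIncrement() < total) {
                starters.submit(() -> {
                    try {
                        startProcess.run();
                    } finally {
                        finished.countDown();
                    }
                });
            }
        }, 0, periodMicros, TimeUnit.MICROSECONDS);

        try {
            finished.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            ticker.shutdownNow();
            starters.shutdown();
        }
        return Math.min(fired.get(), total);
    }

    public static void main(String[] args) {
        AtomicInteger starts = new AtomicInteger();
        // Placeholder start action; a real run would start a Camunda process instance here.
        int fired = run(10, 1, starts::incrementAndGet);
        System.out.println(fired + " starts fired, " + starts.get() + " completed");
    }
}
```

The separate starter pool matters because scheduleAtFixedRate never overlaps executions of the same task: if one tick blocked on a slow call, later ticks would start late and the effective rate would drop.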

Equipment and Environment

For this experiment, I use my own machine, a 2019 MacBook Pro with the following specs:

- 2.6 GHz 6-Core Intel Core i7

- 2 GB 2667 MHz DDR4

You can find the code for this experiment here.

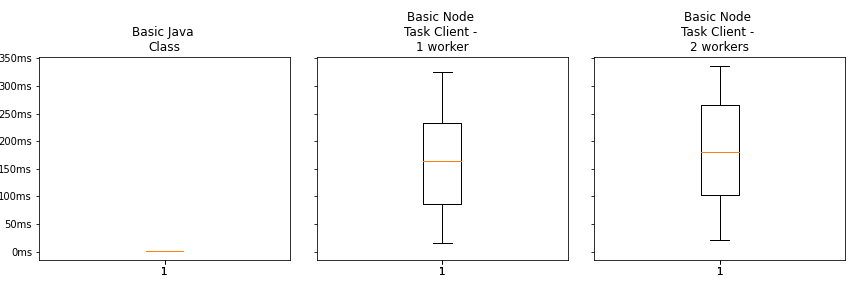

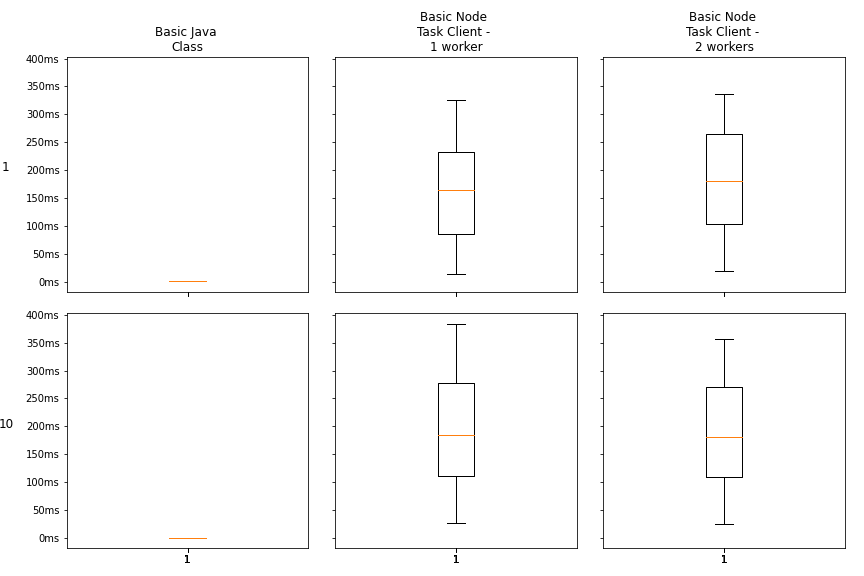

1. Basic Tasks, 1 concurrent

We start by comparing the results of running only the basic tasks with the different service task implementations. We do this by measuring the duration of each Camunda process from start to end, and visualising the aggregated results as a boxplot. Each of these runs starts one process per second, for a total of 60 starts over 60 seconds.

Here we can see a stark difference in duration between the Java Class approach and the external task client approach. The Java Class results are too small to see in the diagram, but they averaged ~4 ms per process, while the Node Task Client averaged roughly 150 ms per process. That makes the Java Class approach about 37.5 times faster than the Node Task Client.

Pretty impressive stuff.

What we see here could be the effect of the extra latency that task clients incur through long polling, a performance difference between Java and NodeJS, or both.
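The boxplots used throughout these results summarise each run's per-process durations by their quartiles. As a minimal sketch of that aggregation, the Java below computes the five-number summary (minimum, first quartile, median, third quartile, maximum) with linear interpolation between ranks. The class name is hypothetical, the durations could come from, for example, the durationInMillis field of Camunda's historic process instances (an assumption about the data source), and plotting tools differ in quartile method and whisker rules, so their boxes may differ slightly.

```java
import java.util.Arrays;

public class BoxplotSummary {
    // Percentile of an ascending array, interpolating linearly between ranks (p in [0, 1]).
    static double percentile(double[] sorted, double p) {
        double pos = p * (sorted.length - 1);
        int lower = (int) Math.floor(pos);
        int upper = (int) Math.ceil(pos);
        return sorted[lower] + (pos - lower) * (sorted[upper] - sorted[lower]);
    }

    // Five-number summary {min, q1, median, q3, max} of a non-empty array of durations.
    static double[] summarize(double[] durationsMs) {
        double[] sorted = durationsMs.clone();
        Arrays.sort(sorted);
        return new double[] {
            sorted[0],
            percentile(sorted, 0.25),
            percentile(sorted, 0.5),
            percentile(sorted, 0.75),
            sorted[sorted.length - 1]
        };
    }

    public static void main(String[] args) {
        double[] durationsMs = {4, 3, 5, 4, 6}; // illustrative values, not measured data
        System.out.println(Arrays.toString(summarize(durationsMs))); // [3.0, 4.0, 4.0, 5.0, 6.0]
    }
}
```

The distance between the first and third quartiles is what the height of each box shows, which is the "spread" compared between approaches.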

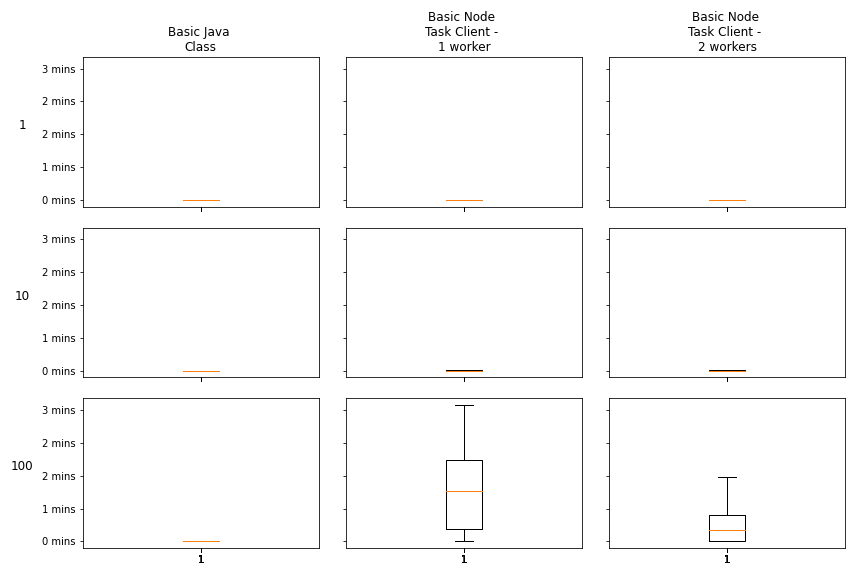

2. Basic Tasks, Introducing Concurrency

We then up the ante by running 10 process starts per second.

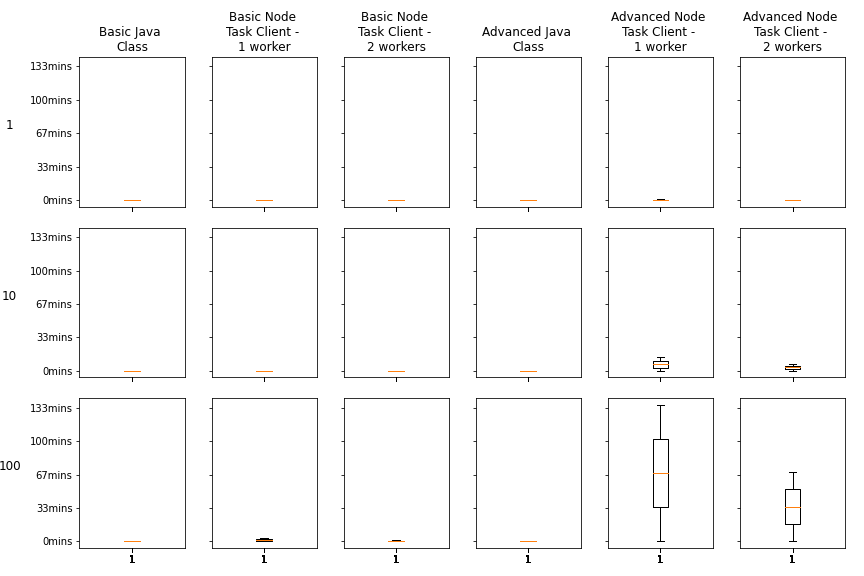

Note that in the results that follow, the y-axis (left) represents the number of concurrent process starts per second, and the x-axis (top) represents the combination of task type and service task implementation.

The same pattern as before holds: the NodeJS task client approach takes a significant performance hit compared with the Java Class approach.

But bumping the concurrency from 1 to 10 doesn’t make much of a difference.

Let’s push the envelope and introduce a concurrency of 100 hits per second.

Suddenly, we see the external client approach getting crushed. Some possible reasons why:

- This could be the result of the default maximum connection limits in Camunda or in the Tomcat server (which the Camunda Docker image runs on), with tasks clogging up the available connections.

- NodeJS is single-threaded for non-I/O work, while Camunda may handle multithreading within the engine itself.

Something interesting: the longer process times can be offset to a certain extent by using multiple task workers. This lends more credence to the idea that the single-threaded nature of NodeJS contributes to the throttling (adding more workers means adding more threads of execution, which spreads the load).

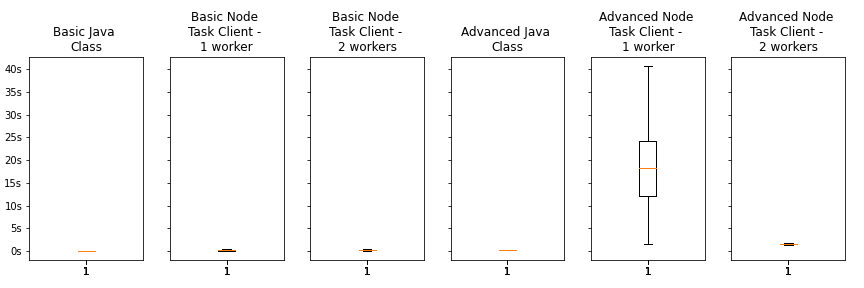

3. With Advanced Tasks, 1 concurrent

Here we run a computationally intensive process, instead of the simple summation.

The result is that the Advanced Node Task Client with a single worker dominates the scale: it is clearly unable to handle even low concurrency for computationally intensive tasks. Adding a second task worker brings its durations all the way back down, which again points to NodeJS' single-threaded nature.

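Interestingly, when the advanced task is run on its own in my NodeJS interpreter, it takes longer than 1 second, but not more than 2 seconds. At one start per second, a single worker therefore finishes each task later than the next one arrives, so a backlog builds up; two workers together can roughly keep pace with the arrivals.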
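A deliberately simplified queueing model illustrates why one worker falls behind while two keep up. It assumes each worker processes one task at a time, arrivals are evenly spaced, and every task needs a fixed 1.5 s of work (a value picked from the 1 to 2 second range above). It is not a model of how the Node client actually schedules tasks, and WorkerQueueModel is a hypothetical name.

```java
public class WorkerQueueModel {
    // FIFO model: `jobs` tasks arrive evenly at `arrivalsPerSecond`, each needing
    // `serviceSeconds` on one of `workers` identical, strictly serial workers.
    // Returns the average time from arrival to completion, in seconds.
    static double averageDuration(int jobs, double arrivalsPerSecond, double serviceSeconds, int workers) {
        double[] freeAt = new double[workers]; // time at which each worker next becomes idle
        double total = 0;
        for (int i = 0; i < jobs; i++) {
            double arrival = i / arrivalsPerSecond;
            // Assign the task to the worker that becomes free earliest.
            int w = 0;
            for (int k = 1; k < workers; k++) {
                if (freeAt[k] < freeAt[w]) {
                    w = k;
                }
            }
            double start = Math.max(arrival, freeAt[w]);
            freeAt[w] = start + serviceSeconds;
            total += freeAt[w] - arrival;
        }
        return total / jobs;
    }

    public static void main(String[] args) {
        // 60 starts at 1 per second, 1.5 s of work each
        System.out.println(averageDuration(60, 1.0, 1.5, 1)); // 16.25
        System.out.println(averageDuration(60, 1.0, 1.5, 2)); // 1.5
    }
}
```

With one worker, each task adds 0.5 s to the backlog, so the average duration keeps growing with the length of the run; with two workers, every task starts as soon as it arrives. Raising the arrival rate in the model shows how quickly the backlog compounds.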

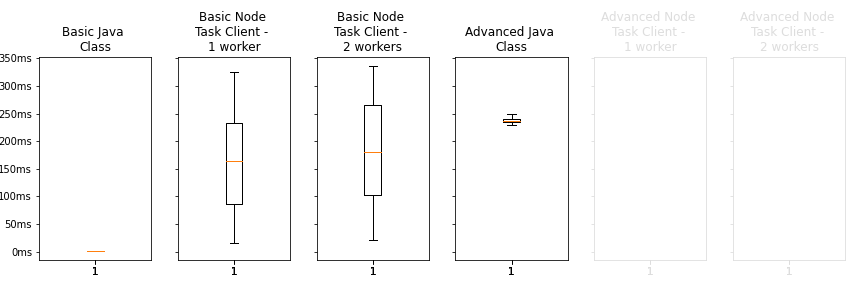

Let’s remove the Advanced Node Task Client single- and double-worker results, because they dominate the scale.

Something interesting to note here: the Java Class approaches have a much smaller spread than their NodeJS counterparts.

4. More concurrencies

We also ran the concurrency tests for the advanced task clients.

An hour on average for the computationally intensive tasks on the NodeJS worker! Just incredible.

Of all the options available, the Java Class approach continues to scale well.

Conclusions

The Java Class approach wins across the board. This is possibly because it is “native” to the Camunda engine: it is compiled together with Camunda and called directly by the engine.

This does mean tight coupling between service implementations and Camunda itself, but the performance gains are significant.

However, if Java is not widely used within the organisation and there is a compelling need to limit technical diversity, task clients are still a viable option, provided that:

- Longer latencies are acceptable. After all, different scenarios have different SLAs, and speed is not always the most important factor.

- Running multiple task clients to ease bottlenecks is an option in the event of performance hits.

If these conditions hold, task clients can make the application more scalable, because processing is offloaded from the main Camunda engine. They also make the architecture more modular, which can be a big win for managing complexity.

Any other tradeoff considerations that could be worth looking at? Drop me a note in the comments below!