In this post we look at four different kinds of conversation engines that can be used to build chatbots. The kind you choose can determine the power and flexibility of your final product, and ultimately how well it scales to handle many diverse conversational flows.

What is a conversation engine?

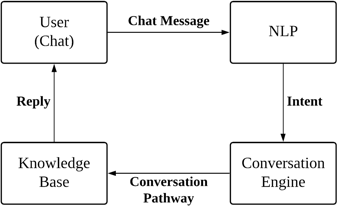

To explain this, we first have to understand a little about how chatbots work. Chatbots are software constructs that accept any kind of text input from users and return a reply, usually some piece of information the user is interested in.

For instance, a weather chatbot when asked, “What is the weather like today?” should reply with, “It is 38°C here in Singapore today, expect no cloud cover and scorching sun. The humidity might kill you.”

Again? Bad bot. But how does it do this?

The bot does this by first running the question through a Natural Language Processor, which outputs an intent. An intent represents what the user means: a kind of unique identifier of meaning. The weather question above, for instance, might map to an intent such as ask_weather.

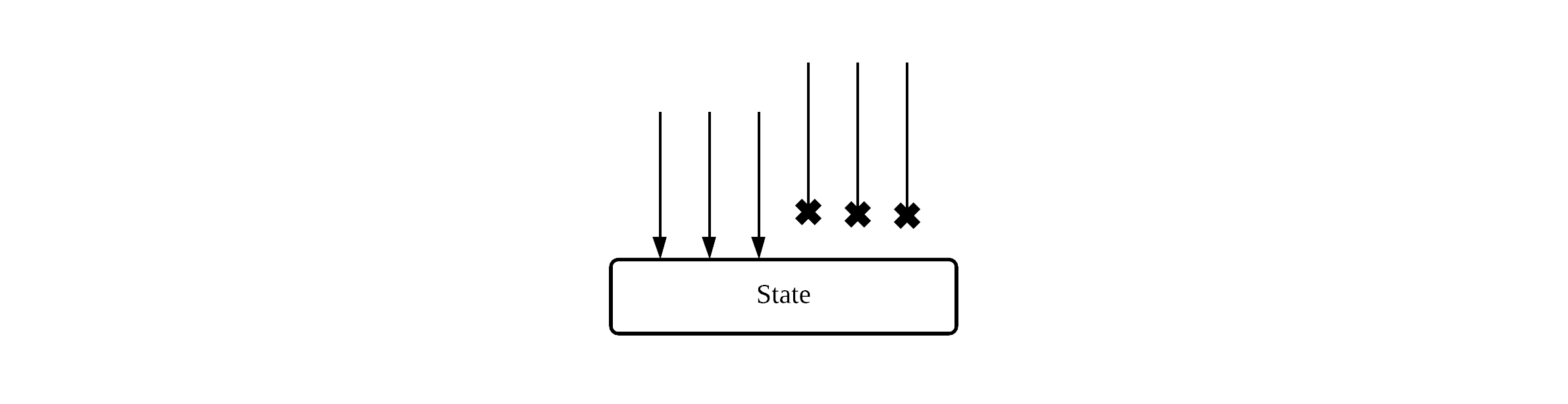

It is this intent that gets fed into the Conversation Engine: the part of the chatbot that decides which conversational pathway to take based on the intents it receives.
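To make the flow concrete, here is a minimal sketch of the pipeline in JavaScript. It assumes a toy keyword matcher standing in for the Natural Language Processor (a real one would use a trained model), and the names `classifyIntent`, `conversationEngine` and `handleMessage` are hypothetical, not taken from any library.

```javascript
// Hypothetical stand-in for the Natural Language Processor:
// maps raw text to an intent using simple keyword matching.
function classifyIntent(text) {
  const lower = text.toLowerCase();
  if (/\b(hi|hello|hey)\b/.test(lower)) return 'greeting';
  if (lower.includes('weather')) return 'ask_weather';
  return 'unknown';
}

// Hypothetical conversation engine: decides the reply for a given intent.
function conversationEngine(intent) {
  switch (intent) {
    case 'greeting':
      return 'Hi there!';
    case 'ask_weather':
      return 'It is 38°C here in Singapore today.';
    default:
      return "Sorry, I didn't catch that.";
  }
}

// The pipeline: text -> NLP -> intent -> conversation engine -> reply
function handleMessage(text) {
  const intent = classifyIntent(text);
  return conversationEngine(intent);
}

console.log(handleMessage('What is the weather like today?'));
```

Keeping the two stages separate means the NLP can be swapped out without touching the conversation logic, which is the part the rest of this post is about.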

A good example of a conversation engine can be found in video games. You know how, when you start a conversation with an NPC in a video game, they give you options to reply? The rest of the conversation depends on the option you pick at that point, and that conditional pathway is determined and powered by a conversation engine.
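Dialogue like this is often modelled as a tree of nodes, where each reply option points to the next node. The sketch below uses a made-up NPC script; the node names and the `choose` helper are hypothetical.

```javascript
// Hypothetical NPC dialogue tree: each node has a line of NPC dialogue
// and a list of reply options, each pointing at the next node.
const dialogue = {
  start: {
    npc: 'Traveller! Care to hear about the weather?',
    options: [
      { text: 'Sure, tell me.', next: 'weather' },
      { text: 'No thanks.', next: 'goodbye' },
    ],
  },
  weather: {
    npc: 'Hot as always. Stay hydrated.',
    options: [{ text: 'Thanks!', next: 'goodbye' }],
  },
  goodbye: { npc: 'Safe travels.', options: [] },
};

// Follow the option the player picked and return the next node's key.
function choose(nodeKey, optionIndex) {
  const option = dialogue[nodeKey].options[optionIndex];
  if (!option) throw new Error(`No option ${optionIndex} at node ${nodeKey}`);
  return option.next;
}

// The player's choice at each step determines the pathway taken.
let node = 'start';
console.log(dialogue[node].npc);
node = choose(node, 0); // player picks "Sure, tell me."
console.log(dialogue[node].npc);
```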

A highly simplified visual idea of where the conversation engine fits in the general chatbot architecture can be found below.

Design trade-offs

When designing a conversation engine, the designer has to keep several trade-offs in mind. Many are worth thinking about, but the most important ones I settled on were:

- Scalability: how easily can the number of conversational pathways grow, and how do we manage all the different flows without them becoming a jumble of intersecting pathways?

- Ease of Implementation: how quickly and easily can you implement the engine, using the fewest resources?

The options

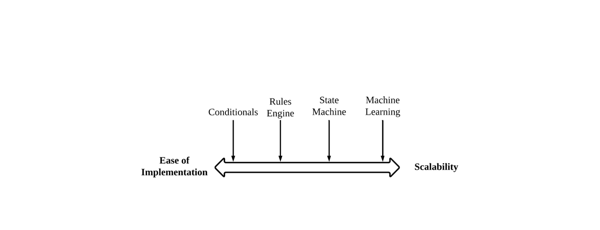

Researching this turned up several ways of implementing chatbot conversation engines. I have classified them into four basic approaches:

- Conditional Statements

- Rules Engine

- State Machine

- Machine Learning

These approaches fall neatly along the Ease of Implementation vs. Scalability trade-off spectrum, running from the easiest to implement (conditional statements) to the most scalable (machine learning).

- Note that Machine Learning is a little bit more iffy than it appears, because of its non-deterministic nature. More on this later.

Conditional Statements

Basically, your if-else or switch statements: depending on which intent the chatbot receives, a different branch handles it.

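```javascript
// The intent produced by the NLP step (hard-coded here for illustration)
const intent = 'greeting';

if (intent === 'greeting') {
  // respond to a greeting
} else if (intent === 'ask_weather') {
  // look up and report the weather
} else {
  // fall back to a default reply for any unrecognised intent
}
```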

This approach is straightforward and simple, and can be implemented in any programming language that supports conditional statements.

However, as they try to scale this approach, developers eventually end up with so many conditionals that the conversation designer becomes overwhelmed. Typically, by around half a dozen intents the code starts to look extremely messy, and sooner if the code handling each intent is long and not properly abstracted into functions.
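One common way to keep the handling code manageable for a little longer is to move each branch into its own function and look handlers up by intent. This is a minimal sketch with hypothetical handler names and replies; it tidies the code, but the structure is still flat.

```javascript
// Each intent gets its own small, named handler function (replies are illustrative).
function handleGreeting() {
  return 'Hi there!';
}

function handleAskWeather() {
  return 'It is 38°C and sunny.';
}

function handleDefault() {
  return "Sorry, I didn't catch that.";
}

// A lookup table replaces the growing if-else chain.
// A Map avoids accidentally matching built-in object keys like "toString".
const handlers = new Map([
  ['greeting', handleGreeting],
  ['ask_weather', handleAskWeather],
]);

function reply(intent) {
  // Fall back to the default handler for unknown intents.
  const handler = handlers.get(intent) || handleDefault;
  return handler();
}

console.log(reply('greeting'));
```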

This approach also typically suffers from an inherently flat structure, which makes it difficult to design nested conversations, where responses also depend on earlier statements in the conversation history. To build them, the developer has to fight against the structure of the program rather than work with it, for instance by introducing nested if-else statements or some kind of souped-up conversational context.
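To see what fighting the structure looks like, here is a sketch in which a follow-up question ("what about tomorrow?") only makes sense if the previous intent was a weather question. The `context` object and the `ask_tomorrow` intent are hypothetical; the point is that history has to be threaded through by hand and checked with nested conditionals.

```javascript
// Hand-rolled conversational context that the conditionals must consult.
const context = { lastIntent: null };

function reply(intent) {
  let answer;
  if (intent === 'greeting') {
    answer = 'Hi there!';
  } else if (intent === 'ask_weather') {
    answer = 'It is 38°C today.';
  } else if (intent === 'ask_tomorrow') {
    // Nested branch: the meaning depends on what was said before.
    if (context.lastIntent === 'ask_weather') {
      answer = 'Tomorrow looks just as hot.';
    } else {
      answer = 'Tomorrow? What about tomorrow?';
    }
  } else {
    answer = "Sorry, I didn't catch that.";
  }
  // Every path relies on this bookkeeping being done correctly.
  context.lastIntent = intent;
  return answer;
}

console.log(reply('ask_weather'));
console.log(reply('ask_tomorrow'));
```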

For these two reasons, conditional statements in chatbots are best reserved for the simplest use cases. Need a chatbot that just says hi and tells you whether the lights connected to your home automation system are on or not? Then this approach is sufficient.

Rules Engine

A rules engine, or business rules engine (same thing), is a software abstraction built to evaluate and execute business rules. In the context of a chatbot, we can think of a business rule as a course of action to take when some criterion is met.

If this sounds awfully like a conditional statement, that’s because it is, well, kind of. Business rules engines take conditional statements to the next level by enshrining them in a set of abstractions, often expressed in a DSL (domain-specific language), that offer a great deal of power. For example, these abstractions allow multi-step processes, decision branches and a whole host of features that would be tiresome to code and read as plain conditionals, greatly increasing development productivity.

These abstractions are also what give rules engines the ability to power more complex chatbots. By pairing each intent with a rule, you can support significantly more intents before getting bogged down in complex conversational dialogue than you could with pure conditionals.
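Stripped to its core, a rules engine holds a list of rules, each pairing a condition with an action, and fires every rule whose condition matches the current facts. This is a deliberately tiny sketch (real engines such as easy-rules add priorities, composite rules and more); the `createRule` and `fireRules` names are hypothetical.

```javascript
// A rule pairs a condition over the facts with an action that updates them.
function createRule(name, when, then) {
  return { name, when, then };
}

// Evaluate every registered rule against the facts; run the matching ones.
function fireRules(rules, facts) {
  for (const rule of rules) {
    if (rule.when(facts)) {
      rule.then(facts);
    }
  }
  return facts;
}

// One rule per intent (replies are illustrative).
const rules = [
  createRule('hi rule', (f) => f.intent === 'greeting',
    (f) => { f.reply = 'Hi there good sir!'; }),
  createRule('weather rule', (f) => f.intent === 'ask_weather',
    (f) => { f.reply = 'It is 38°C today.'; }),
];

console.log(fireRules(rules, { intent: 'greeting' }).reply);
```

Adding a new intent means appending a rule rather than editing a growing chain of branches.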

Take a look at this rule, built with the easy-rules library for Java:

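```java
import org.jeasy.rules.api.Rule;
import org.jeasy.rules.core.RuleBuilder;

// Replies to the "greeting" intent produced by the NLP step.
// "greeting".equals(...) avoids a NullPointerException if no intent fact is set.
Rule hiRule = new RuleBuilder()
        .name("hi rule")
        .description("basic greeting")
        .when(facts -> "greeting".equals(facts.get("intent")))
        .then(facts -> facts.put("reply", "Hi there good sir!"))
        .build();
```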

With one glance it is easy to tell what intent is being dealt with, and what reply is being returned.

Despite being more powerful, rules engines take more time and effort to build into a system than pure conditionals, making them harder to implement.

Rules engines also face a crucial problem when it comes to conversations: conversational history. As with conditionals, it is possible to handle conversation history with a rules engine, but doing so requires building in additional mechanisms to track conversational context.
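One such mechanism is to store the conversation history in the facts themselves so that rule conditions can inspect it. In this sketch the `history` array and the `ask_tomorrow` intent are hypothetical, and the calling code, not the engine, is responsible for recording each intent after the rules fire.

```javascript
// Minimal engine: fire every rule whose condition matches.
function fireRules(rules, facts) {
  for (const rule of rules) {
    if (rule.when(facts)) rule.then(facts);
  }
  return facts;
}

// Helper: the intent received just before the current one, if any.
const previousIntent = (f) => f.history[f.history.length - 1];

const rules = [
  {
    name: 'weather rule',
    when: (f) => f.intent === 'ask_weather',
    then: (f) => { f.reply = 'It is 38°C today.'; },
  },
  {
    // Only meaningful as a follow-up to a weather question.
    name: 'tomorrow follow-up rule',
    when: (f) => f.intent === 'ask_tomorrow' && previousIntent(f) === 'ask_weather',
    then: (f) => { f.reply = 'Tomorrow looks just as hot.'; },
  },
];

// Extra bookkeeping the engine does not do for us: tracking history.
const history = [];
function handle(intent) {
  const facts = fireRules(rules, { intent, history, reply: null });
  history.push(intent);
  return facts.reply;
}

console.log(handle('ask_weather'));
console.log(handle('ask_tomorrow'));
```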

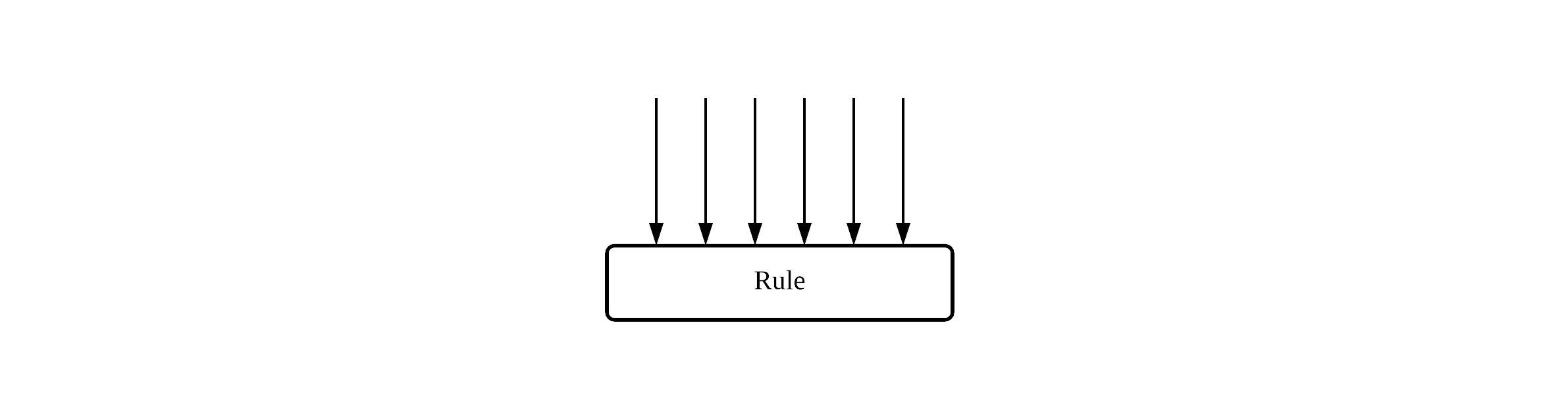

In addition, rules engines operate in a flat structure. What I mean by this is that once a rule is registered, every incoming intent is fired against it.

To limit which intents can fire a rule, explicit handling must be written into the rules themselves. And as the dialogue pathways grow more complex, so does the chance that more than one rule picks up the same intent.
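The sketch below illustrates that flat structure: every intent is evaluated against every rule, so two loosely written conditions can both claim the same intent, and the last one to fire silently overwrites the reply. The rules, the `topic` fact used for the explicit guards, and the `firedRules` tracking are all hypothetical.

```javascript
// Minimal engine that also records which rules fired.
function fireRules(rules, facts) {
  facts.firedRules = [];
  for (const rule of rules) {
    if (rule.when(facts)) {
      rule.then(facts);
      facts.firedRules.push(rule.name);
    }
  }
  return facts;
}

const rules = [
  {
    name: 'weather rule',
    // Loosely written: matches any intent that mentions temperature.
    when: (f) => f.intent.includes('temperature'),
    then: (f) => { f.reply = 'It is 38°C outside.'; },
  },
  {
    name: 'recipe rule',
    when: (f) => f.intent === 'what_temperature',
    then: (f) => { f.reply = 'Bake it at 180°C.'; },
  },
];

const facts = fireRules(rules, { intent: 'what_temperature' });
console.log(facts.firedRules); // both rules fired
console.log(facts.reply); // the recipe rule ran last, so its reply wins

// Explicit handling: guard each rule with the topic of the conversation.
const guardedRules = [
  { ...rules[0], when: (f) => f.topic === 'weather' && f.intent.includes('temperature') },
  { ...rules[1], when: (f) => f.topic === 'recipe' && f.intent === 'what_temperature' },
];
const guarded = fireRules(guardedRules, { intent: 'what_temperature', topic: 'weather' });
console.log(guarded.firedRules);
```

Every new rule has to be checked against all existing ones for this kind of overlap, which is what makes the approach harder to scale.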

We can, however, deal with many of these problems by explicitly building our applications around state.

State machine

Conversations can intuitively be thought of in terms of events. Each utterance is a message, each speaker an emitter, and the person receiving the message a listener.

Taking this a step further, we can see that at each step of the conversation, each participant is in a specific state. Some general states might be thinking (searching for information), waiting for a reply, or replying. For example, someone who has just asked what the weather is like today would be in a WaitingOnWeatherForecast state.

The above approach is closely related to a formal design pattern called the State Pattern, and to finite state machines more generally, which are described in terms of States, Transitions and Actions. In short, Actions act on States, sometimes forcing a Transition into another State.
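In the State Pattern, each state is an object that knows how to respond to an input and which state comes next. Here is a minimal sketch with hypothetical states (`IdleState`, `AwaitingCityState`) and intents: each state's `handle` method returns a reply plus the next state, which is the transition, and the `Conversation` object simply delegates to whichever state is current.

```javascript
// Each state object handles intents (actions) and returns the reply
// together with the next state (the transition).
class IdleState {
  handle(intent) {
    if (intent === 'ask_weather') {
      return { reply: 'Which city?', next: new AwaitingCityState() };
    }
    return { reply: 'How can I help?', next: this };
  }
}

class AwaitingCityState {
  handle(intent) {
    if (intent === 'provide_city') {
      return { reply: 'It is 38°C there today.', next: new IdleState() };
    }
    return { reply: 'Sorry, which city did you mean?', next: this };
  }
}

// The context object delegates to whichever state is current.
class Conversation {
  constructor() {
    this.state = new IdleState();
  }

  receive(intent) {
    const { reply, next } = this.state.handle(intent);
    this.state = next;
    return reply;
  }
}

const convo = new Conversation();
console.log(convo.receive('ask_weather')); // prints: Which city?
console.log(convo.receive('provide_city')); // prints: It is 38°C there today.
```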

In the context of chatbots, each action represents an intent, and transitions are triggered by how each state responds to a specific intent. This makes for a handy pattern where the chatbot can move between states with relative ease, depending on which intents fire against it.

Not only is movement easy, but the effect of an intent now depends on the state of the conversation. For example, an intent of “what_temperature” means drastically different things in a UserAskedForRecipe state (a cooking temperature) than in a UserAskedAboutWeather state (the temperature outside).
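The same idea can also be written as a transition table keyed first by state and then by intent. In this sketch the state names come from the example above, while the replies and the `step` helper are hypothetical; the same what_temperature intent resolves differently depending on the current state.

```javascript
// Transition table: state -> intent -> { reply, next }.
const transitions = new Map([
  ['UserAskedForRecipe', new Map([
    ['what_temperature', { reply: 'Preheat the oven to 180°C.', next: 'UserAskedForRecipe' }],
  ])],
  ['UserAskedAboutWeather', new Map([
    ['what_temperature', { reply: 'It is 38°C outside.', next: 'UserAskedAboutWeather' }],
  ])],
]);

// Look up how the current state responds to an intent.
// Returns null when the state has no use for the intent.
function step(state, intent) {
  const byIntent = transitions.get(state);
  return (byIntent && byIntent.get(intent)) || null;
}

console.log(step('UserAskedForRecipe', 'what_temperature').reply);
console.log(step('UserAskedAboutWeather', 'what_temperature').reply);
```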

From the above, we can see that nested conversation structures are built into the flow of state machines. This means that conversational history is inherent to the design of the program, which makes this structure better suited to building deeply nested conversations.

Another advantage state machines have over rules engines is that they naturally limit which intents can fire against them, handling each one directly in the individual states. If an intent means nothing to the current state, the state machine simply discards it, leaving the general conversation manager to handle the lack of a response.
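A sketch of that division of labour, assuming a hypothetical `ConversationManager`: each state lists only the intents it accepts, anything else is discarded, and the manager supplies a generic reply when that happens. The states, intents and fallback text are illustrative.

```javascript
// Each state lists only the intents it cares about.
const states = {
  Idle: {
    ask_weather: { reply: 'Which city?', next: 'AwaitingCity' },
  },
  AwaitingCity: {
    provide_city: { reply: 'It is 38°C there today.', next: 'Idle' },
  },
};

class ConversationManager {
  constructor(initialState) {
    this.state = initialState;
  }

  receive(intent) {
    const accepted = states[this.state];
    // The state discards intents it has no use for...
    if (!Object.prototype.hasOwnProperty.call(accepted, intent)) {
      // ...and the manager handles the lack of a response (state unchanged).
      return "Sorry, I can't help with that right now.";
    }
    const { reply, next } = accepted[intent];
    this.state = next;
    return reply;
  }
}

const manager = new ConversationManager('Idle');
console.log(manager.receive('provide_city')); // discarded: Idle does not accept it
console.log(manager.receive('ask_weather'));
```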

Together, these properties make well-designed state machines easier to scale.

And herein lies one of the biggest problems. Because of their event-driven nature, state machines are very hard to design and architect properly. If poorly done, they can easily turn into a hotbed of overlapping complications, which is also why I personally consider this approach harder to implement than rules engines.

Machine learning

At the end of the day, the above solutions still face significant problems in extensibility and flexibility. Each of these approaches has an inherent rigidity, in that all cases and sub-cases need to be handled individually.

Enter machine learning. Natural Language Processing technology, or NLP (no, not the shady one), has made huge leaps in recent years thanks to advances in deep learning. I will not go into depth describing the technologies at the core of chatbot NLP libraries, because for our purposes it is enough to understand how machine learning works in general.

This diagram is hyper-simplified, but it conveys the basic idea: machine learning algorithms ingest data and train models that learn the conversational pathways on their own. No more painstakingly crafting every possible pathway of a conversation: given enough data, the algorithm does this for you.
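To give a flavour of "learning pathways from data", here is a deliberately naive sketch: it counts, across example conversations, which bot action followed each (previous action, user intent) pair, then predicts the most frequent one. This frequency count is a stand-in for illustration only, not how Rasa or deep learning models work internally, and the training conversations and action names are hypothetical.

```javascript
// Hypothetical training conversations: [user intent, bot action] pairs.
const stories = [
  [['greeting', 'greet_user'], ['ask_weather', 'ask_city'], ['provide_city', 'give_forecast']],
  [['ask_weather', 'ask_city'], ['provide_city', 'give_forecast']],
  [['greeting', 'greet_user'], ['goodbye', 'say_goodbye']],
];

// Count how often each action follows a (previous action, intent) pair.
function train(stories) {
  const counts = new Map();
  for (const story of stories) {
    let prevAction = 'start';
    for (const [intent, action] of story) {
      const key = `${prevAction}|${intent}`;
      const byAction = counts.get(key) || new Map();
      byAction.set(action, (byAction.get(action) || 0) + 1);
      counts.set(key, byAction);
      prevAction = action;
    }
  }
  return counts;
}

// Predict the most frequently observed next action, or null if never seen.
function predict(model, prevAction, intent) {
  const byAction = model.get(`${prevAction}|${intent}`);
  if (!byAction) return null;
  let best = null;
  let bestCount = 0;
  for (const [action, count] of byAction) {
    if (count > bestCount) {
      best = action;
      bestCount = count;
    }
  }
  return best;
}

const model = train(stories);
console.log(predict(model, 'ask_city', 'provide_city'));
```

Note that a pathway absent from the training data yields no prediction at all, a small-scale version of why these approaches need so much data.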

Amazing idea, isn’t it? Just feed in enough conversations, and the thing learns to speak on its own. There are even open-source libraries out there, like Rasa, that handle most of the machine learning for you.

Machine learning is probably the best bet for a large and complex web of intersecting conversations. But of course, employing it effectively requires an equally large and complex setup to manage the many moving parts of a big data environment. That is why I have put it all the way to the right, making it (in my opinion) the hardest to implement, but also the most scalable.

Now comes the caveat.

Despite all the hype, machine learning is not always the silver bullet many people promise it to be.

The problem? Data. Lots and lots and lots of data. The data collection effort alone is immense, and the tagging effort needed to make sure all the data is labelled properly can be quite hefty. Many smaller shops and operations simply do not have the resources to produce the kinds of data needed for machine learning algorithms to work effectively. And if the data is poorly tagged, good luck getting good results from your model.

The bigger problem? Non-determinism. Based on my personal experience using Rasa, as well as my broader experience with machine learning in general, all machine learning libraries have to contend with the problem of non-determinism.

This is because many machine learning models, and deep learning ones especially, are essentially black boxes. There is little recourse if we want to fully understand how a model works internally, which makes faults difficult to debug. In fact, this non-determinism is baked into the very evaluation metrics of machine learning models: metrics like accuracy and confidence levels speak to a stochastic, probabilistic approach rather than a deterministic one.
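The probabilistic nature shows up directly in what a classifier hands back: typically a score per intent, often turned into confidences that sum to 1 with a softmax. The bot then has to decide what to do when no intent is confident enough. This sketch assumes made-up raw scores and a hypothetical 0.7 threshold; it is not any particular library's fallback mechanism.

```javascript
// Convert raw model scores into confidences that sum to 1 (softmax).
function softmax(scores) {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Pick the top intent, but fall back if the model is not confident enough.
function chooseIntent(intents, scores, threshold = 0.7) {
  const confidences = softmax(scores);
  let best = 0;
  for (let i = 1; i < confidences.length; i++) {
    if (confidences[i] > confidences[best]) best = i;
  }
  if (confidences[best] < threshold) {
    return { intent: 'fallback', confidence: confidences[best] };
  }
  return { intent: intents[best], confidence: confidences[best] };
}

const intents = ['greeting', 'ask_weather', 'goodbye'];
console.log(chooseIntent(intents, [0.2, 3.1, 0.5])); // clear winner
console.log(chooseIntent(intents, [1.0, 1.1, 0.9])); // too close to call
```

Where exactly to set such a threshold is itself an empirical judgement, which is part of why these systems are harder to reason about than rules or state machines.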

And if you ever need a reminder to examine your options carefully before diving headlong into employing machine learning everywhere, you can always pause and remember Microsoft’s most famous chatbot. I’m kidding. Machine learning has a lot of promise in this space, just do your research first.

Know of any other ways to design a conversation engine? Comment below and let me know!